Journal of Peking University (Health Sciences) ›› 2020, Vol. 52 ›› Issue (6): 1107-1111. doi: 10.19723/j.issn.1671-167X.2020.06.020

Evaluation of the reproducibility of non-verbal facial expressions in normal persons using dynamic stereophotogrammetric system

Tian-cheng QIU, Xiao-jing LIU, Zhu-lin XUE, Zi-li LI

- Department of Oral and Maxillofacial Surgery, Peking University School and Hospital of Stomatology & National Clinical Research Center for Oral Diseases & National Engineering Laboratory for Digital and Material Technology of Stomatology & Beijing Key Laboratory of Digital Stomatology, Beijing 100081, China

摘要:

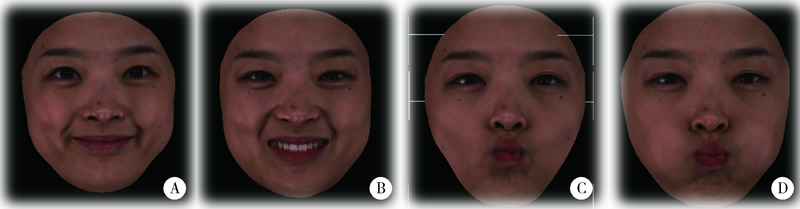

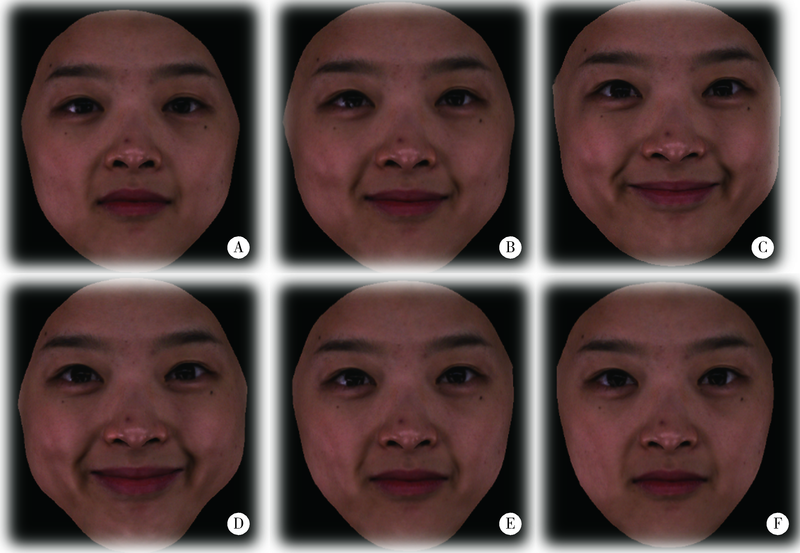

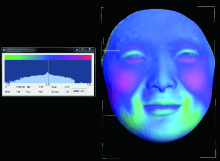

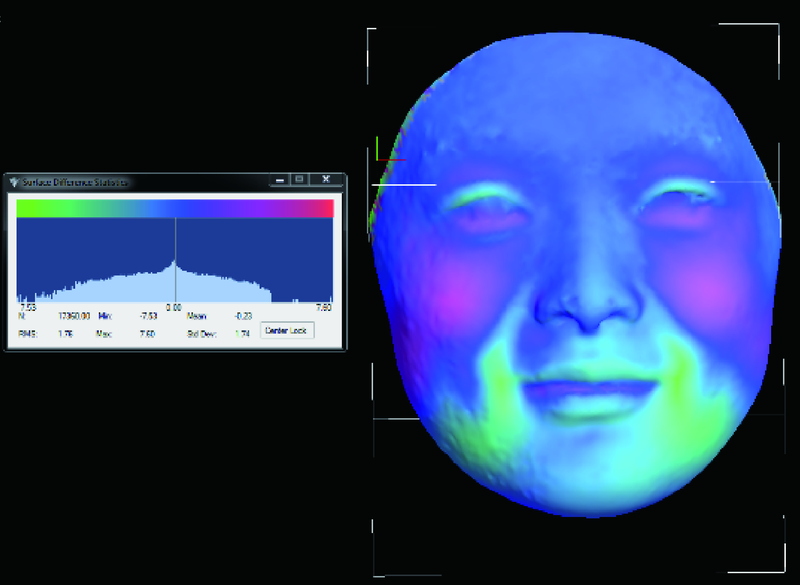

目的:测量正常人群表情运动的可重复性,为患者手术等干预措施的效果评价提供参照数据。方法:征集面部结构大致对称、无面部运动及感觉神经障碍病史的志愿者共15名(男性7名,女性8名,中位年龄25岁)。使用三维动态照相机记录研究对象的面部表情运动(闭唇笑、露齿笑、噘嘴、鼓腮),分辨率为采集频率60帧/s,挑选每个面部表情中最有特征的6帧图像,分别为静止状态时图像(T0)、从静止状态至最大运动状态时的中间图像(T1)、刚达到最大运动状态时的图像(T2)、最大运动状态将结束时的图像(T3)、最大运动状态至静止状态时的中间图像(T4)及动作结束时的静止图像(T5)。采集两次面部表情三维图像数据,间隔1周以上。以静止图像(T0)为参照,将运动状态系列图像(T1~T5)与之进行图像配准融合,采用区域分析法量化分析前后两次同一表情相同关键帧图像与对应静止状态三维图像的三维形貌差异,以均方根(root mean square,RMS)表示。结果:闭唇笑、露齿笑以及鼓腮表情中,前后两次的对应时刻(T1~T5)图像与相应T0时刻的静止图像配准融合,计算得出的RMS值差异无统计学意义。撅嘴动作过程中,前后两次T2时刻对应面部三维图像与相应T0时刻静止图像配准融合,得出RMS值差异有统计学意义(P<0.05),其余时刻的图像差异无统计学意义。结论:正常人的面部表情具有一定的可重复性,但是噘嘴动作的可重复性较差,三维动态照相机能够量化记录及分析面部表情动作的三维特征。
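The RMS measure described in the abstract can be illustrated with a minimal sketch. The abstract does not state how the capture software computes RMS, so this example assumes the simplest case: the two surfaces are already registered and their points are in one-to-one correspondence. The function name `rms_distance` and the toy coordinates are hypothetical and chosen only for illustration.

```python
import math


def rms_distance(moving, reference):
    """RMS of point-to-point distances between two registered surfaces.

    Assumption: both arguments are equal-length lists of (x, y, z) points
    that already correspond one-to-one after registration.
    """
    if len(moving) != len(reference) or not moving:
        raise ValueError("surfaces must be non-empty and the same size")
    squared = [math.dist(p, q) ** 2 for p, q in zip(moving, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical toy data: a three-point facial region at rest (T0) and at
# key frame T2 in two capture sessions.
t0 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
t2_session1 = [(0.0, 0.0, 3.0), (1.0, 0.0, 3.0), (0.0, 1.0, 3.0)]
t2_session2 = [(0.0, 0.0, 4.0), (1.0, 0.0, 4.0), (0.0, 1.0, 4.0)]

rms1 = rms_distance(t2_session1, t0)  # session 1, T2 versus T0
rms2 = rms_distance(t2_session2, t0)  # session 2, T2 versus T0
print(rms1, rms2, abs(rms1 - rms2))
```

In a study of this design, one such RMS value per subject, expression, key frame, and session would then be compared between the two sessions with a paired statistical test. Which test the authors used is not stated in the abstract.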

CLC Number:

- R782.2
